Steve Souders is currently doing more to improve the performance of web pages and web browsers than anyone else out there. When he worked at Yahoo!, he was responsible for YSlow (a great tool for finding ways to improve the performance of your site), and he wrote the book on improving page performance: High Performance Web Sites. Now he works for Google, but much of what he’s up to is the same: making web pages load faster.

I’ve been really excited about one of his recent projects: UA Profiler. The profiler is a tool that you run in your browser to determine how it handles a number of network-related features that have a large impact on page load performance.

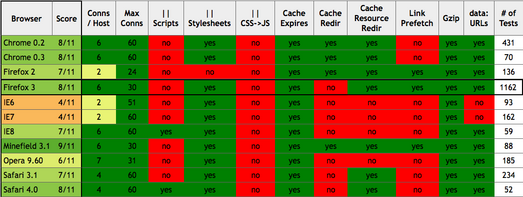

Here’s a look at the current breakdown:

Firefox 3.1 takes the lead, passing 9 of the 11 tests. Firefox 3, Chrome, and Safari 4 come next with 8, followed by Firefox 2, Safari 3.1, and IE 8 with 7. These numbers give you an overall feel for the page load performance you’ll see in a browser. (Naturally, these tests don’t take rendering or JavaScript performance into account, but network performance generally accounts for a larger share of total page load time anyway.)

Information about network performance is important for two reasons:

- It informs browser vendors about the quality of their browser. Fixing any of the issues covered by the tests will yield faster page loads.

- It informs web site developers about the problems they should take into consideration when developing a site. For example, if a browser they support doesn’t download stylesheets in parallel, perhaps their page should be reworked.

The tests themselves can be broken down into several categories (Steve explains them all in detail in the FAQ):

Network Connections

Two big things are tested here: the number of simultaneous connections that can be opened to a single hostname (sub-domains count as different hostnames) and the total number of connections that can be open at once across all hostnames. These numbers give you a good indication of how many parallel downloads can occur (most commonly seen when downloading multiple images simultaneously).
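The effect of these two limits can be sketched with a simple model. The Python below is a minimal illustration, not how any browser actually schedules requests: it assumes every resource is the same size and finishes in one "wave", and the function name `download_waves` and the example hostnames are hypothetical.

```python
def download_waves(resources_per_host: dict[str, int], per_host: int, max_total: int) -> int:
    """Count the rounds needed to fetch equal-sized resources under both limits."""
    if per_host < 1 or max_total < 1:
        raise ValueError("connection limits must be at least 1")
    remaining = dict(resources_per_host)
    waves = 0
    while any(remaining.values()):
        budget = max_total  # global limit shared by all hosts in this round
        for host in sorted(remaining):
            # Each host gets at most per_host connections, and never more than the budget left.
            take = min(per_host, remaining[host], budget)
            remaining[host] -= take
            budget -= take
        waves += 1
    return waves
```

Under this model, moving half of the images to a second hostname halves the number of waves when the per-host limit is the bottleneck (12 images on one host with a per-host limit of 2 take 6 waves; split 6/6 across two hosts, they take 3), which is why spreading resources across sub-domains can help.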

Additionally, there is a check to see if the browser supports Gzip compression. The results aren’t too exciting here, as all modern browsers support Gzip compression at this point.
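For context, this is roughly what a server does with that browser support: it checks the request’s Accept-Encoding header and, if gzip is acceptable, compresses the body and labels it with Content-Encoding. Below is a minimal Python sketch using the standard gzip module; the helper names are hypothetical, and the header parsing is simplified (it honors `q=0` but ignores the `*` wildcard).

```python
import gzip


def accepts_gzip(accept_encoding: str) -> bool:
    """Return True if the Accept-Encoding header lists gzip with a non-zero q-value."""
    for part in accept_encoding.split(","):
        token, _, params = part.strip().partition(";")
        if token.strip().lower() != "gzip":
            continue
        q = 1.0  # default quality when no q parameter is given
        for param in params.split(";"):
            name, _, value = param.strip().partition("=")
            if name.strip().lower() == "q":
                try:
                    q = float(value)
                except ValueError:
                    q = 0.0
        return q > 0
    return False


def encode_body(body: bytes, accept_encoding: str) -> tuple[bytes, dict[str, str]]:
    """Gzip the body when the client allows it; always send Vary so caches keep both variants."""
    headers = {"Vary": "Accept-Encoding"}
    if accepts_gzip(accept_encoding):
        headers["Content-Encoding"] = "gzip"
        return gzip.compress(body), headers
    return body, headers
```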

Parallel Downloads

All browsers are capable of downloading images in parallel (multiple images downloading simultaneously), but what about other resources, like scripts or stylesheets?

Unfortunately, it’s much harder to get scripts and stylesheets to load in parallel, since their contents may dramatically change the rest of the page. Loading these resources occurs in three steps:

- Downloading (can be parallelized)

- Parsing

- Execution

The load order breaks down like so (sort of an advanced game of rock-paper-scissors): Scripts prevent other scripts from parsing and executing, stylesheets prevent scripts from parsing and executing.

It’s been hard for browsers to parallelize script downloading, since scripts are capable of changing the contents of the page, possibly adding or removing scripts or stylesheets. To work around this, browsers are getting better at opportunistically looking ahead in the document and preloading stylesheets and scripts, even if their actual use may be delayed.
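The look-ahead idea can be sketched as a scanner that runs over the not-yet-parsed part of the document and collects URLs to fetch early, without executing anything. This minimal Python sketch uses the standard library’s html.parser; the class, function, and file names are hypothetical, and real browser preload scanners are far more involved.

```python
from html.parser import HTMLParser


class PreloadScanner(HTMLParser):
    """Collects external scripts and stylesheets in document order, executing nothing."""

    def __init__(self) -> None:
        super().__init__()
        self.urls: list[tuple[str, str]] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel = (attributes.get("rel") or "").lower().split()
        if tag == "script" and attributes.get("src"):
            self.urls.append(("script", attributes["src"]))
        elif tag == "link" and "stylesheet" in rel and attributes.get("href"):
            self.urls.append(("stylesheet", attributes["href"]))


def lookahead(remaining_markup: str) -> list[tuple[str, str]]:
    """Scan markup the main parser hasn't reached yet and return what could be fetched early."""
    scanner = PreloadScanner()
    scanner.feed(remaining_markup)
    scanner.close()
    return scanner.urls
```

Inline scripts are skipped because there is nothing to download for them; only external resources benefit from looking ahead.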

Going forward, changes in this area should yield some of the largest gains in page load performance, since it’s still one of the most untapped areas for improvement.

Caching

While all modern browsers support caching of resources, caching of page redirects is much less common. For example, consider the case where a user types in “http://google.com/”: Google redirects the user to “http://www.google.com/”, but only a couple of browsers cache that redirect so that it isn’t repeated on later visits.

Redirects also occur for resources such as stylesheets, images, and scripts. Since these happen much more frequently, it’s that much more important for browsers to cache every redirect they can.
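The bookkeeping involved can be sketched in a few lines of Python. This is a minimal illustration, assuming only permanent (301) redirects are cached (HTTP/1.1 allows caching them by default); the `RedirectCache` class is hypothetical and ignores real-world details such as expiry headers.

```python
class RedirectCache:
    """Remembers permanent (301) redirects so they can be skipped on later requests."""

    def __init__(self) -> None:
        self._permanent: dict[str, str] = {}

    def record(self, url: str, status: int, location: str) -> None:
        # Only 301s are stored; other redirect statuses are treated as uncacheable here.
        if status == 301:
            self._permanent[url] = location

    def resolve(self, url: str, max_hops: int = 10) -> str:
        # Follow cached redirects without touching the network; max_hops guards against loops.
        hops = 0
        while url in self._permanent and hops < max_hops:
            url = self._permanent[url]
            hops += 1
        return url
```

The same cache serves both cases in the text: a page redirect like the Google example and a redirected stylesheet, image, or script.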

Prefetching

This is part of the HTML 5 specification and allows pages to specify resources that should be opportunistically downloaded in case they are needed later (image rollovers are the common example here).

There’s a full page describing how to use prefetching on the Mozilla developer wiki, but it isn’t hard to get started. It’s as simple as including a new link element in the head of your page:

```html
<link rel="prefetch" href="/images/big.jpeg">
```

And that resource will be downloaded preemptively.
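If a page has several resources worth prefetching (a set of rollover images, say), a server-side template could emit the tags from a list. Below is a minimal Python sketch; `prefetch_tags` is a hypothetical helper, and it escapes each URL so characters like `&` stay valid inside the attribute.

```python
from html import escape


def prefetch_tags(urls: list[str]) -> str:
    """Return one <link rel="prefetch"> element per URL, one per line."""
    return "\n".join(
        f'<link rel="prefetch" href="{escape(url, quote=True)}">' for url in urls
    )
```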

Inline Images

The final case that the profiler tests for is whether a browser supports inline images using a data: URI. Data URIs let developers include the image data directly within the page itself. While this saves an extra HTTP request, it’s important to note that the resource will not be cached on its own (it may be cached as part of the complete page). Whether to use this technique will vary case by case, but having browsers support it is absolutely important.
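Building a data: URI is just a matter of base64-encoding the bytes and prefixing the MIME type. A minimal Python sketch follows; `data_uri` is a hypothetical helper name. Base64 turns every 3 bytes into 4 characters, so the inlined image is roughly a third larger than the original file, one reason the technique suits small images best.

```python
import base64


def data_uri(data: bytes, mime_type: str) -> str:
    """Encode raw bytes as a base64 data: URI usable in an img src attribute."""
    encoded = base64.b64encode(data).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"
```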

Going forward, it will become increasingly important to have publicly visible tests like the UA Profiler that encourage browser vendors to implement critical browser functionality more quickly. Anything that can improve the performance of the browsing experience for users of the web, even indirectly, is absolutely critical in my book.

Dmitrii 'Mamut' Dimandt (November 24, 2008 at 11:07 am)

Wow. Useful indeed

Lenny Rachitsky (November 24, 2008 at 11:48 am)

No doubt Steve Souders is the biggest proponent of front side performance best practices. A few other things by him:

http://developer.yahoo.com/performance/rules.html

http://stevesouders.com/hammerhead/

http://stevesouders.com/cuzillion/

http://oreilly.com/catalog/9780596529307/

Boris (November 24, 2008 at 12:01 pm)

> Stylesheets prevent other stylesheets from parsing and executing,

> scripts prevent other scripts from parsing and executing,

> stylesheets prevent scripts from parsing and executing.

This is almost entirely wrong. In a naive implementation (say Gecko 1.7), scripts prevent everything else from parsing (and hence executing), as do stylesheets.

As a matter of programming model, the necessity is more or less that scripts prevent everything else from parsing (and hence executing) and that stylesheets prevent following scripts from executing. Stylesheets certainly don’t have to affect each other.

John Resig (November 24, 2008 at 12:43 pm)

@Boris: So just to clarify you’re saying that the statement “Stylesheets prevent other stylesheets from parsing and executing” is wrong – not all three of the statements.

Robert Kaiser (November 24, 2008 at 12:55 pm)

I wonder why my test run with SeaMonkey 2.0a2pre (today’s version) shows parallel scripts working but Minefield 3.1 is listed as not supporting this, despite using the same Gecko – is this something that was implemented recently and his summary algorithm favors e.g. what the majority of test runs resulted in?

vBm (November 24, 2008 at 1:14 pm)

Having the latest nightly of Minefield gives me 10/11 … pretty impressive :)

Results for your browser: Minefield 3.1 (10/11)

Connections per Host (HTTP/1.1): 15

Max Connections (HTTP/1.1): 30

Parallel Scripts: yes

Parallel Stylesheets: yes

Parallel Stylesheet and Inline Script: no

Cache Expires: yes

Cache Redirects: yes

Cache Resource Redirects: yes

Link Prefetch: yes

Compression Supported: yes

data: URLs: yes

Steve Souders (November 24, 2008 at 1:19 pm)

Wow, John, thanks for the nice write-up. The implementation of parallel script loading in Firefox 3.1 is a huge benefit for web page performance.

@Robert: Yes, the overall stats are based on majority (really, median). So if earlier versions of Minefield 3.1 didn’t support parallel script loading (which I believe is true), and there are more test runs on the early version, then UA Profiler shows that early result. This really comes down to how detailed of a browser version # to show. A feature on the todo list is to allow finer browser version granularity. Eventually, if enough people run with the “current” version, the true result will win out.
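The median-based summary described above can be illustrated with a short Python sketch. This is not UA Profiler’s actual code; it assumes runs are grouped by the reported browser version and that yes/no results are encoded as 1/0. `statistics.median_low` is used so the summary is always a value that was actually observed, and for 0/1 results it amounts to a majority vote (ties fall to "no").

```python
from collections import defaultdict
from statistics import median_low


def summarize(runs: list[tuple[str, int]]) -> dict[str, int]:
    """Map each browser version to the median of its test-run results."""
    grouped: dict[str, list[int]] = defaultdict(list)
    for browser, result in runs:
        grouped[browser].append(result)
    return {browser: median_low(results) for browser, results in grouped.items()}
```

This reproduces Robert’s case: if three early Minefield 3.1 runs report "no" for parallel scripts and two newer runs report "yes", the summary stays "no" until newer runs outnumber the old ones.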

Steven Roussey (November 24, 2008 at 2:51 pm)

@Steve: Maybe you could expire test runs on browsers that are test versions instead — seems like an easier solution than creating a new version for every nightly.

Tom Robinson (November 24, 2008 at 3:30 pm)

I don’t understand why parallel script downloads are so hard: just download all scripts immediately but don’t execute them until they would normally be executed.

Jonas Sicking (November 24, 2008 at 5:53 pm)

> Stylesheets prevent other stylesheets from parsing and executing,

> scripts prevent other scripts from parsing and executing,

> stylesheets prevent scripts from parsing and executing.

In gecko (and I believe other engines as well) things are a bit more complicated than the above makes out. Basically there are a set of things that cause the parsing to block, and there are a set of things that are loaded even though the parser is blocked. Basically the diagram goes something like this:

| | Stylesheets block | Scripts block | Scripts after stylesheets block | Resources loading (while blocked) |
|---|---|---|---|---|
| Firefox 1 | yes | yes | yes | none |
| Firefox 2 | yes | yes | yes | none |
| Firefox 3 | no | yes | yes | none |
| FF 3.1 | no | yes | yes | script |

Basically the second column (scripts block) is always going to have to be ‘yes’, in all browsers. Otherwise you break document.write.

The third column (scripts after stylesheets block) is fairly important too; otherwise scripts running before the stylesheet is downloaded and applied are going to get the wrong values for things like .offsetLeft and getComputedStyle.

Where things have started progressing lately is that the fourth column (resources loading) has changed from ‘none’, i.e. browsers are starting to look ahead in the markup for things to download even though the parser is blocked. This is what allows things like parallel downloading of scripts.

Recent Firefox 3.1 nightlies are passing the parallel script downloads test. I.e. for a page with three external scripts in a row, the first script tag is going to block, but then we look ahead, see the following two scripts, and download those while we are still blocked.

The reason recent nightlies are failing the “|| CSS->JS” test is that the test loads a stylesheet, “foo.css”, followed by an inline script that does

doNothing();

The script is going to make us block until “foo.css” has finished loading (in case there is a call to .offsetTop in the script). We are going to look ahead here too, but since we only look for scripts when looking ahead, we won’t start downloading the resource that follows it.

So I believe the current tests are somewhat bad. The “|| Scripts” and “|| CSS->JS” tests have more differences than their names indicate. We don’t really do anything different between the two, yet we fail one and pass the other.

I think it’d be better if the tests instead of testing “|| Scripts” vs. “|| stylesheets” vs. “|| CSS->JS”, tested what things block, and what things are still downloaded during blocking.

There is also the matter of how far ahead the implementation looks while the parser is blocked. It would be interesting to see what different browsers do there…

Steve Souders (November 24, 2008 at 9:14 pm)

Hi, Jonas. Thanks for the explanations and suggestions. I replied to some of your points below, but since it’s so long let me start with the conclusion: I understand why browsers do what they do for the default case; I just wish browser vendors gave web developers more built-in support for working around this default behavior in order to get faster pages.

You mention that scripts must always block the parser; otherwise document.write won’t work. I totally agree this is how all browsers work today, except for Opera. Opera has a little known option, “Delayed Script Execution”, that causes scripts to be deferred but document.write still works. This option is off by default and the documentation says “not well-tested on desktop”, so I’m not saying this is primetime. But it shows that it’s possible for parsing to continue while scripts are downloaded. Should this be the default behavior? No, there could be dependencies on the document.write. But I would love it if this is how SCRIPT DEFER worked: a web developer could explicitly say a script should be deferred, parsing continues, and any document.write’s are preserved. This would be an awesome step forward in the world of ads. I wrote a blog post about this: http://www.stevesouders.com/blog/2008/09/11/delayed-script-execution-in-opera/

For the third column, “scripts after stylesheets block”, it is true that parsing a stylesheet and script in non-deterministic order could have unexpected results if the script relies on getComputedStyle based on what the stylesheet did to the page. But I don’t frequently see this situation. When the web developer knows the script doesn’t depend on the stylesheet, I’d like to see an easy way for them to inform the browser so the page can be downloaded and rendered faster. Again, the web developer could use DEFER on the inline script and the browser wouldn’t block subsequent downloads (in other words, stylesheets and later resources would all download in parallel as usual).

You mentioned there’s no difference between two of the tests, but that’s mostly because I shortened the titles in the table. If you think of “|| Scripts” as “|| External Scripts”, and “|| CSS->JS” as “|| Stylesheet -> Inline Script”, you can see the difference. (These expanded explanations are available in the FAQ.) Many web developers are surprised that putting an inline script after a stylesheet blocks all subsequent downloads. Three of the top ten US sites suffer from this inefficiency: eBay, MSN, and Wikipedia. In none of these cases does the inline script depend on the previous stylesheet, but because developers are unaware of how browsers handle this scenario, their pages are slower. They could have put the inline script before the stylesheet and shaved 200+ ms off an empty cache page load.
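The stylesheet-then-inline-script pattern described above can be spotted mechanically. Below is a minimal Python sketch of a hypothetical lint (the class and function names are invented for illustration) that reports the (line, column) of every inline script appearing after a stylesheet link. It is deliberately coarse: it flags any such script whether or not downloads follow it, and it can’t tell whether the script actually depends on the stylesheet.

```python
from html.parser import HTMLParser


class CssThenInlineScriptFinder(HTMLParser):
    """Records the position of inline scripts that come after a stylesheet link."""

    def __init__(self) -> None:
        super().__init__()
        self.seen_stylesheet = False
        self.findings: list[tuple[int, int]] = []  # (line, column) of each flagged script tag

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "stylesheet" in rel:
            self.seen_stylesheet = True
        elif tag == "script" and not attributes.get("src") and self.seen_stylesheet:
            self.findings.append(self.getpos())


def find_inline_scripts_after_css(markup: str) -> list[tuple[int, int]]:
    finder = CssThenInlineScriptFinder()
    finder.feed(markup)
    finder.close()
    return finder.findings
```

Moving the inline script above the stylesheet, as suggested, makes the lint come back empty.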

I am totally trying to test “what things block and what things are downloaded during blocking.” I knew that stylesheets and external scripts caused downloads to be blocked, and that a stylesheet followed by an inline script caused downloads to be blocked, so I wrote those tests. Let me know any other tests I should add and I’d be happy to do that.

Right now, web developers have to jump through hoops to get better performance from the browser, for example, loading scripts in iframes (GMail) and lazy loading scripts and stylesheets (My Yahoo). These techniques require more complex DHTML. If the implementation were simpler (for example, a better, more widely supported SCRIPT DEFER attribute), these performance optimizations would be within reach of a wider audience of web developers.

Jordan (November 25, 2008 at 12:47 am)

Steve: Good news for you! Firefox 3.1 has . (implemented by Jonas, because irony is amusing). It seems that since IE and Firefox support it, it wouldn’t take much pressure for the smaller browsers to catch up. Considering how much of your comment seems to want this, I think you ought to add this as a 12th test (though also because a round dozen is better than 11).

jerone (November 26, 2008 at 6:10 am)

The detailed test results show that SeaMonkey 2.0 scores 10/11.

Amad (November 29, 2008 at 3:44 am)

@Jordan, Jonas

What is this ‘.’ you are talking about?

Bali (December 4, 2008 at 3:35 am)

A huge thanks to Steve Souders for his efforts. They are much appreciated, and are changing the web for the better!

Argoyne (December 4, 2008 at 10:20 am)

One issue with the test is user configuration.

In Opera the number of connections, both per host and global, are in the main preferences. The default per host is 8, which the test shows as 4. Other settings and their results (Opera setting, result from test): (16, 8), (32, 16), (64, 22), (128, 24). The results for this test seem highly variable.

Equally the default on Opera for max is 20 but it has the same options as above, this time the test results agree with the setting.

I’m not sure if any of the others tests are user configurable but the Cache Resource Redirect and Cache Redirect can be enabled from Opera’s config page by turning off the always check settings under Cache.

I realise that the idea with these things is a default settings test and that the non-user configurable things are much more interesting. I have a nasty tendency to play with random settings so a site such as this is fun as I can see them have an effect so thanks for that, anyway hope this is vaguely interesting to someone.

theludditedeveloper (December 15, 2008 at 1:07 pm)

I have just recently posted a possible solution that will speed up many websites using jQuery and third-party components provided by companies like Infragistics, Telerik, and Component One.

I would be very interested in hearing your comments on this idea, and what it would take to get the browser manufacturers on board with this.

see post at:

http://theludditedeveloper.wordpress.com/2008/12/15/browser-support-for-jquery-infragistics-telerik-component-one-and-other-component-suppliers/

Grey (January 31, 2009 at 6:37 pm)

@Steve Souders: So… by changing 4 options in Opera, you can get a 10/11 score, right? I changed the 3 “Always check…” settings in opera:config and it showed 9/11. Then I tried again with “Delayed Script Execution” on and it showed 10/11. So basically, to score higher, all Opera needs to do is change its default preferences? Of course that’d probably involve lots of Q/A and so on, but still…